NIO is a real-life HAL, already making a positive impact. What else can we learn from cinema’s most misunderstood AI?

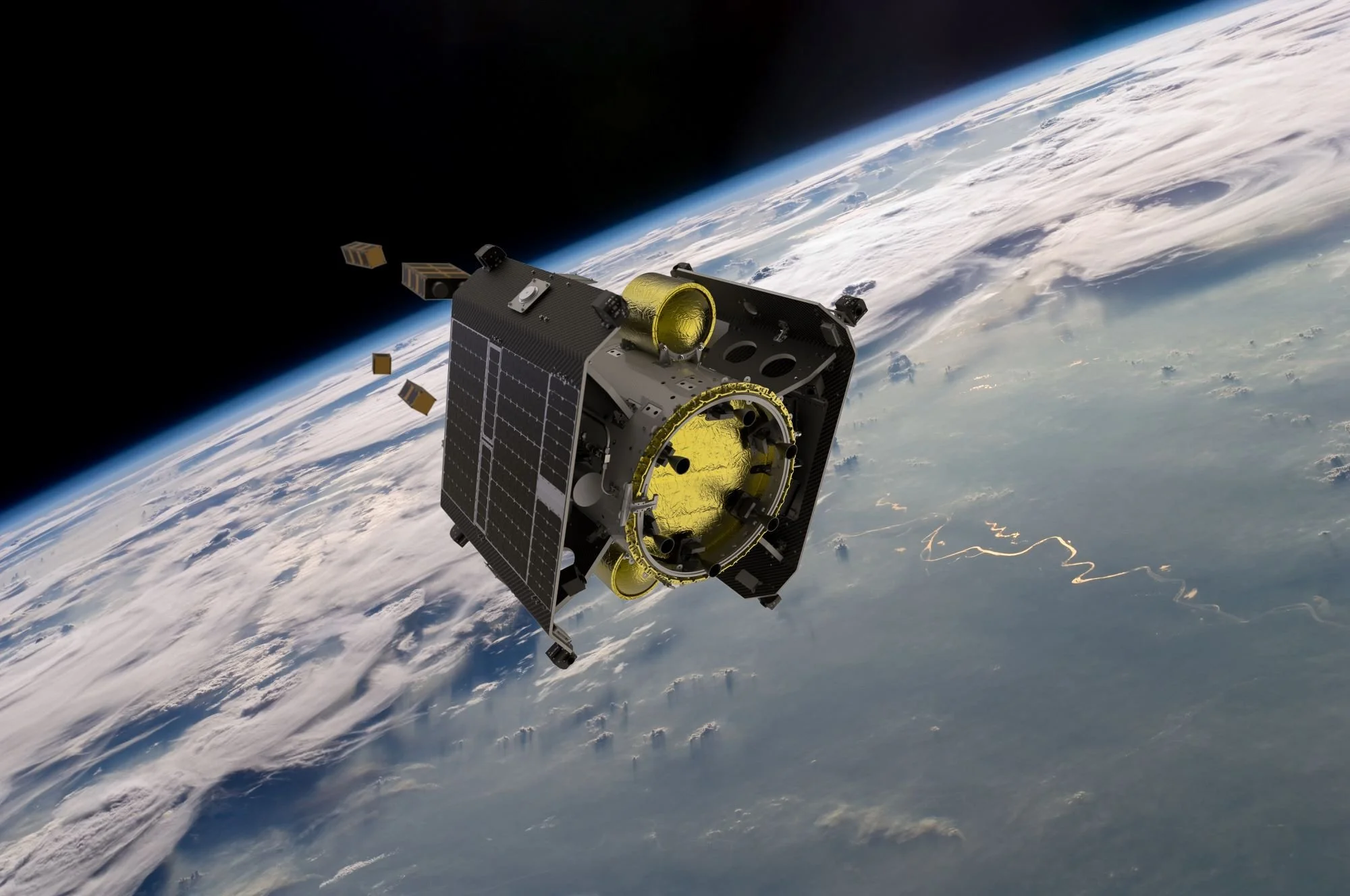

NIO.space (Networked Intelligence in Orbit) is our mission to develop Earth’s most advanced AI-enhanced hybrid observation platform for decision intelligence in space.

The goal of NIO.space is to deploy intelligent cyberinfrastructure for space situational awareness and for disaster warning and response, in support of enhanced Earth system predictability and planetary stewardship.

NIO.space is a global leader in intelligence on board spacecraft. With our partners ESA Phi-Lab, NASA, D-Orbit, Unibap and Oxford University, we have demonstrated numerous firsts in orbit, including the first flood segmentation, the first re-training of an ML payload (for EO applications), the first ML retrieval of methane plumes, the first federated compute between ground and orbit, and the first migration of an AI between instruments in space.

The end-game is networked edge intelligence in space, orchestrated with terrestrial foundation models, all working in concert. We are already seeing that this combination will be vastly more powerful than any component in isolation. We call this intelligent cyberinfrastructure a ‘Live Twin’.

World breakthrough in onboard AI model training presented by Φ-lab at IGARSS

ESA explores cognitive computing in space with FDL breakthrough experiments